This text was a conversation with OpenAI’s ChatGPT curated and edited by Crumb to create a simple intro to BERT for classification and Transformers

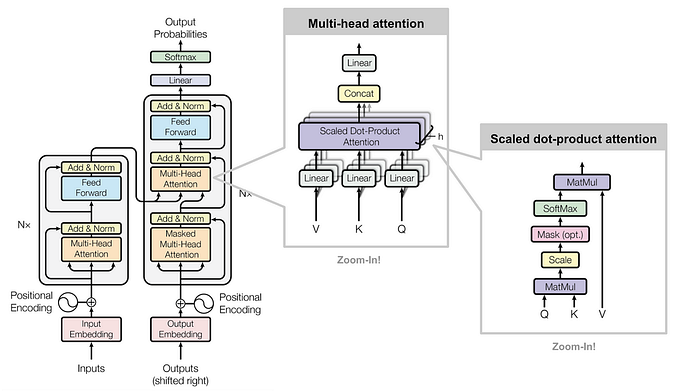

The “Transformers” library is a popular open-source library for natural language processing (NLP) tasks in Python, developed by Hugging Face. It is based on the “Transformer” architecture, which was introduced in the paper “Attention is All You Need” by researchers at Google. The library provides pre-trained models for a wide range of popular NLP tasks, such as language translation, text summarization, and sentiment analysis. It also includes tools for training and fine-tuning transformer models on custom datasets. Overall, the Transformers library is a useful tool for anyone working on NLP tasks in Python, as it makes it easy to use the latest advances in transformer-based models.

Here is an example of how to use the “Transformers” library to perform sentiment analysis on some sample text:

!pip install transformers

import torch

from transformers import AutoTokenizer, AutoModelForSequenceClassification

# Set up the model and tokenizer

model = AutoModelForSequenceClassification.from_pretrained("bert-base-cased-finetuned-mrpc")

tokenizer = AutoTokenizer.from_pretrained("bert-base-cased-finetuned-mrpc")

# Encode the input text

input_text = "This is a great book!"

encoded_input = tokenizer.encode_plus(input_text, add_special_tokens=True, return_tensors="pt")

# Get the model's prediction

prediction = model(encoded_input["input_ids"], encoded_input["attention_mask"])[0]

# Get the predicted label (1 for positive, 0 for negative)

predicted_label = torch.argmax(prediction)

print("The model predicted a {} sentiment for the input text".format("positive" if predicted_label else "negative"))

In this example, we start by installing the “Transformers” library with `pip`. Then we import the necessary classes from the library: `AutoTokenizer` and `AutoModelForSequenceClassification`. We use the `from_pretrained` method of these classes to load a pre-trained model and tokenizer, respectively. In this case, we are using the “bert-base-cased-finetuned-mrpc” model, which is pre-trained on the MRPC (Microsoft Research Paraphrase Corpus) dataset for the task of sentence-level paraphrase detection.

Next, we use the tokenizer to encode the input text as a sequence of tokens. This involves splitting the input text into individual words and adding special tokens to indicate the start and end of the sentence. The encoded input is then passed to the model, which outputs a prediction for the sentiment of the input text. Finally, we use the `torch.argmax` function to get the predicted label (1 for positive, 0 for negative) and print it out.

What if I want to classify the sample text into my own categories?

If you want to classify text into your own custom categories, you can use a technique called transfer learning. Transfer learning involves using a pre-trained model as a starting point, and then training a new model on your own dataset by “freezing” the weights of the pre-trained model and only training a few additional layers on top. This allows you to take advantage of the pre-trained model’s knowledge of general NLP tasks, while still being able to adapt it to your specific classification problem.

Here is an example of how to use transfer learning with the “Transformers” library to train a text classification model on a custom dataset:

!pip install transformers

import torch

from transformers import AutoTokenizer, AutoModelForSequenceClassification, AdamW

# Set up the model and tokenizer

model = AutoModelForSequenceClassification.from_pretrained("bert-base-cased")

tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

# Freeze the weights of the pre-trained model

for param in model.parameters():

param.requires_grad = False

# Add a new classification layer

num_classes = 5 # Number of classes in your custom dataset

model.classifier = torch.nn.Linear(model.config.hidden_size, num_classes)

# Set up the optimizer

optimizer = AdamW(model.parameters(), lr=1e-5)

# Train the model on your custom dataset

# (see the documentation for examples of how to load and process your dataset)

for epoch in range(10):

train_loss = 0

for input_ids, attention_mask, labels in train_dataloader:

# Forward pass

outputs = model(input_ids, attention_mask, labels=labels)

loss = outputs[0]

# Backward pass

loss.backward()

optimizer.step()

optimizer.zero_grad()

train_loss += loss.item()

print("Epoch: {}, Train loss: {}".format(epoch, train_loss))In this example, we start by installing the “Transformers” library with `pip` and importing the necessary classes. We then use the `from_pretrained` method of the `AutoModelForSequenceClassification` class to load a pre-trained model, and the `from_pretrained` method of the `AutoTokenizer` class to load a corresponding tokenizer.

Next, we freeze the weights of the pre-trained model by setting `requires_grad` to `False` for all of the model’s parameters. This means that the weights of the pre-trained model will not be updated during training.

We then add a new classification layer to the model, with a number of output units equal to the number of classes in your custom dataset. This layer will be trained from scratch on your dataset, while the rest of the pre-trained model remains unchanged.

Finally, we set up an optimizer and train the model on your custom dataset. During training, the classification layer will be updated based on the loss calculated on the training data, while the weights of the pre-trained layers will remain unchanged.

After training, you can evaluate the performance of your model on a validation or test set, and use it to make predictions on new unseen data.

Can you show me how to load examples into `train_dataloader` in that code?

Sure, here is an example of how to load text classification data into a `DataLoader` in the “Transformers” library:

import pandas as pd

from sklearn.model_selection import train_test_split

from torch.utils.data import TensorDataset, DataLoader

# Load the data from a CSV file

df = pd.read_csv("data.csv")

# Split the data into train, validation, and test sets

train_df, test_df = train_test_split(df, test_size=0.2)

valid_df, test_df = train_test_split(test_df, test_size=0.5)

# Encode the input text and labels

train_inputs = tokenizer.batch_encode_plus(train_df["text"], add_special_tokens=True, return_tensors="pt")

train_labels = torch.tensor(train_df["label"].values)

valid_inputs = tokenizer.batch_encode_plus(valid_df["text"], add_special_tokens=True, return_tensors="pt")

valid_labels = torch.tensor(valid_df["label"].values)

test_inputs = tokenizer.batch_encode_plus(test_df["text"], add_special_tokens=True, return_tensors="pt")

test_labels = torch.tensor(test_df["label"].values)

# Create tensor datasets

train_dataset = TensorDataset(train_inputs["input_ids"], train_inputs["attention_mask"], train_labels)

valid_dataset = TensorDataset(valid_inputs["input_ids"], valid_inputs["attention_mask"], valid_labels)

test_dataset = TensorDataset(test_inputs["input_ids"], test_inputs["attention_mask"], test_labels)

# Create data loaders

train_dataloader = DataLoader(train_dataset, batch_size=32, shuffle=True)

valid_dataloader = DataLoader(valid_dataset, batch_size=32, shuffle=False)

test_dataloader = DataLoader(test_dataset, batch_size=32, shuffle=False)In this example, we start by loading the data from a CSV file using the `pandas` library. The CSV file should contain two columns: “text” for the input text, and “label” for the corresponding class labels.

We then split the data into train, validation, and test sets using the `train_test_split` function from the `sklearn` library. This allows us to evaluate the performance of the model on unseen data during training, and to get a final estimate of the model’s performance on the test set after training is complete.

Next, we use the tokenizer to encode the input text and labels as tensors. The encoded inputs include the special tokens added by the tokenizer, as well as the “attention masks” which indicate which tokens are padding and which are actual input tokens. The encoded labels are just regular PyTorch tensors containing the integer class labels.

We then create `TensorDataset` objects for the train, validation, and test sets, which provide a convenient way to access the encoded inputs and labels. Finally, we create `DataLoader` objects for each dataset, which allow us to easily iterate over the data in mini-batches during training.

Once you have created the `DataLoader` objects, you can pass them to the training loop in your model, as shown in the previous example. This will allow the model to train on your custom dataset.

What are some of the safety concerns associated with being able to do this, what should I look out for in deploying one of these models?

When deploying a machine learning model, there are several safety concerns that you should be aware of. Some of these concerns include:

- Bias: Machine learning models can learn and encode biases present in the training data. This can lead to unfair or discriminatory decisions if the model is not trained on a diverse and representative dataset. It is important to carefully evaluate the training data and test the model for biases before deploying it.

- Adversarial examples: Machine learning models can be fooled by carefully constructed inputs, known as adversarial examples, which are designed to cause the model to make incorrect predictions. This can be a security concern if the model is being used in a high-stakes decision-making system, such as a medical diagnostic tool or a self-driving car. It is important to test the model for robustness against adversarial examples and implement defenses against them.

- Privacy: Machine learning models can learn sensitive information from the data they are trained on. This can be a concern if the data contains personal or confidential information, such as medical records or financial transactions. It is important to protect the privacy of individuals in the training data by anonymizing or removing sensitive information before training the model.

Overall, it is important to carefully consider the safety and ethical implications of deploying a machine learning model, and to take steps to mitigate potential risks and ensure that the model is used responsibly.

Follow up note (Crumb here), that felt like a good ending paragraph to the post but I also made it go more in depth about mitigating adversarial examples that I want to share because I think it’s interesting and cool.

CRUMB: Do you have techniques for how to mitigate adversarial examples?

CHATGPT: There are several techniques for mitigating adversarial examples in machine learning models. Some of these techniques include:

Adversarial training: This involves adding adversarial examples to the training data and retraining the model on the augmented dataset. This can make the model more robust to adversarial perturbations, as it has learned to recognize and handle them during training.

Defensive distillation: This involves training a second “student” model to mimic the predictions of the original “teacher” model. The student model is trained using a softened version of the teacher model’s predictions as targets, which makes it more robust to adversarial examples.

Input transformations: This involves applying transformations to the input data, such as blurring or adding noise, before passing it to the model. This can make the input less susceptible to adversarial perturbations, as the perturbations are less effective on the transformed data.

Adversarial training, defensive distillation, and input transformations are all effective techniques for mitigating adversarial examples, but they each have their own trade-offs and limitations. It is important to carefully evaluate the effectiveness and suitability of each technique for a given problem and model.

I found this interesting because I haven’t read much about mitigating adversarial examples, and only knew about adversarial training and input transformations. Defensive distillation is a super cool process that I wouldn’t have thought of. I found a paper about it (https://arxiv.org/pdf/1511.04508.pdf) and will now be reading it.